I Made AI Make Me a Game in 14 Days: Here's How

April 23, 2025

It started, as these things sometimes do, with a link from a friend. It ended with … well, this playable something you can try, right here.

This post is about how that went: the successes, the bumps, and what it’s really like making AI make a game.

Part 0: Making Myself Get Into A Project

Right, so there is this guy called Pieter Levels. You might have seen his vibe-coded flight simulator thing - the one that kinda blew up and made an ungodly amount of money, which, you know, hats off. My friend sent me the link, and we had a laugh about how replicating that luck (or skill) wasn’t going to happen for us.

So of course a few days later Pieter announces a Vibe Coding competition. He basically left me no choice. I had to drop what I was doing and figure out how to build a WebGL game, runnable on mobile, in about two weeks (starting late, naturally).

Now, if I was going to do this, I was going to win. Narrator: He was not going to win.

But this was also about seeing how far I could take this whole vibe coding nonsense. I’ve come to believe pretty strongly that AI isn’t just some tool, and vibe coding isn’t for toy projects only. I wanted to make something that feels more engineered, something that feels like a real game.

Here is something I think about often: Seeing AI as a tool is mostly not keeping up with what it can do, and seeing it as a person or entity misses the point.

I think of it more like a basic resource - raw intelligence. More like water or sand. You can get more or less of it, it has different quality, and your job is to build the setup to use that intelligence well. Consider the term Artificial Intelligence itself - you can focus on the Artificial part, treating it like a distinct person or thing. Or, you can focus on the Intelligence - like it’s a substance. I think of it like artificial sand: a pile of stuff you can shape and build with, with more or less of it available depending on the source.

This jam wasn’t starting from zero for me. I’d already spent months building AI workflows, tools, and prompt tricks. So I had a lot of the groundwork ready - the setup to channel that intelligence. You could say I started this game months ago, by building that setup.

My plan for the jam was simple: shove as much AI as possible into every orifice and hope for the best.

Part 1: Making AI Make Ideas

To start, you need ideas. So, first task: throw AI at the problem. I used ChatGPT 4.5 for the creative brainstorming.

The speed was surprising. In its second reply, the first idea it listed was “Event Horizon - Black Hole Arena.” It wasn’t just any idea; it felt right. Dynamic, unique.

Core idea sorted. I started building a prototype. Later, needing more ideas: two more prompts in that same chat, using my standard structure (context dump + concise request), and it gave me the unique mechanics: “quantum stuff” like entanglement and “time echoes.”

Four prompts. It gave me the theme, name (Echoes), and core ideas almost instantly.

Part 2: Making AI Make Your Code

You can’t just spray intelligence everywhere and hope for the best. Using AI well needs structure - building the pipes for that ‘artificial sand’. My workflow is more and more about making things AI-readable.

- The AI-Readable Context Bible: A Project Requirements Document (mostly AI-written) was the master plan. It had everything: jam goals, design ideas, tech choices, file structure. In Cursor, I set this as an ‘Always’ rule, so the AI saw it in every prompt.

- Planning Prompts (aka Wrangling the Flow): Before coding any feature, I’d prompt for a plan:

Lets work on a new feature, here are my unstructured thoughts: [Feature Description / Goal / My Rough Thoughts - often dictated]

----

Write an implementation plan for the above. Do not write any code.

I started using “Do not write any code” mainly to handle Claude 3.7’s character. That stuff was always going off the rails. It would list its big plan, and I could pick the good steps (usually 1-3) and ditch the rest before it wrote tons of code I didn’t need. (You say: “Great lets implement steps 1 and 2 and then stop for testing and review.”)

Even with the better-behaved Gemini 2.5 Pro, I still use this trick. Why? It checks if the AI got the right context. If the plan looks wrong, it usually means my prompt was unclear or missed something. This lets me fix it before the AI goes the wrong way. Doing that is much easier than trying to shove it back onto the right path.
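The plan-first pattern is easy to wrap in a helper. Here is a minimal sketch in Python; the function names are hypothetical and only the prompt wording comes from the template above.

```python
def build_planning_prompt(rough_thoughts: str) -> str:
    """Wrap unstructured feature notes in the plan-first template."""
    return (
        "Lets work on a new feature, here are my unstructured thoughts:\n"
        f"{rough_thoughts.strip()}\n"
        "----\n"
        "Write an implementation plan for the above. Do not write any code."
    )


def build_followup(step_numbers: list[int]) -> str:
    """Ask for only the approved plan steps, then a pause for review."""
    if not step_numbers:
        raise ValueError("pick at least one step")
    steps = " and ".join(str(n) for n in step_numbers)
    noun = "step" if len(step_numbers) == 1 else "steps"
    return f"Great lets implement {noun} {steps} and then stop for testing and review."
```

The point of keeping the two prompts separate is that the plan gets read by a human before any code exists, so a wrong plan costs one reply instead of a refactor.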

Here is the fourth prompt I used to get the game design ideas. To get AI to be more creative it helps to tell it to be really creative.

Part 3: Making Use Of Different AI Flavours

Different AI models aren’t just interfaces. They’re different sources of intelligence. Each has its own power and character. Benchmarks don’t show you how it feels to work with them, the hidden quirks of the intelligence stream. You have to use them to learn their quirks.

Claude 3.7: My first source. The intelligence is strong, definitely smart. But its character? This stuff was wild. It constantly tried to do more, refactor big chunks, show off with complex patterns. Working with it felt like managing a hyperactive junior dev keen to impress by over-engineering. A lot of effort went into directing its energy and cleaning up the mess.

Google Gemini 2.5 Pro: Switching mid-jam felt like tapping a different source. Gemini’s character is more… practical. It listened to instructions better. It did the planned step, then stopped. It felt less like wrestling a firehose and more like watering the garden. (There were some Cursor-related bugs with the integration, but even with those I still found it better.)

Gemini’s practical style also built trust (not that you should ever trust AI). When it suggested keeping things simple instead of adding complexity, it felt sensible, like a solid mid-level dev. The tokens it was spitting out seemed to have a more grounded character. AI isn’t senior dev material yet, but the character of the intelligence felt more mature. Understanding these personalities helps you direct them well.

Part 4: Making AI Solve Harder Problems

Here is something important: AI intelligence per prompt isn’t infinite. Complex tasks or unfocused prompts deplete this finite resource quickly, making the AI fail. Much of effective AI use is about managing this flow - preventing leaks and focusing on what you want solved. There is a metaphor for life in there somewhere.

One example of sectioning things out: having the AI write a grid shader in HLSL. Asking it to generate the whole complex thing at once failed - too much intelligence needed for one burst. Instead, I broke it down into smaller steps: “Implement basic color,” “Now add UV warping,” “Now add grid lines.” Each prompt needed only a little intelligence, allowing it to succeed. This shows why “fix this bug” can be hard: without sufficient context scaffolding, the required intelligence might exceed what’s available in that prompt cycle. Detailed prompts work better because they preprocess the problem, reducing the intelligence needed from the AI itself.
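The shader breakdown can be sketched as a small prompt generator. This is an illustration in Python, not the actual workflow: the step strings are the ones quoted above, while `stepwise_prompts` and the `shared_context` text are hypothetical.

```python
from typing import Iterator

SHADER_STEPS = [
    "Implement basic color",
    "Now add UV warping",
    "Now add grid lines",
]


def stepwise_prompts(steps: list[str], shared_context: str) -> Iterator[str]:
    """Yield one small prompt per step, listing the steps already finished."""
    done: list[str] = []
    for step in steps:
        history = "\n".join(f"- done: {s}" for s in done) or "- nothing yet"
        yield (
            f"{shared_context}\n\n"
            f"Already implemented:\n{history}\n\n"
            f"Next step only: {step}"
        )
        done.append(step)


# Example: three focused prompts instead of one big request.
prompts = list(stepwise_prompts(SHADER_STEPS, "We are writing a grid shader in HLSL."))
```

Each prompt carries the shared context plus a record of what already works, so the model only has to solve the one new step.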

A related issue arose with animation libraries. I used PrimeTween, less common than DOTween. The AI consistently defaulted to DOTween syntax. Why? Because assuming the common tool takes less intelligence than adapting to a niche one. To get the right output, I had to explicitly add PrimeTween syntax to the context. This saved the intelligence for writing the code, rather than splitting it between writing the code and figuring out which library I was using.

Even setting up a virtual machine on Google Compute for the first time, a process filled with painful adventures, eventually yielded to this approach. When stuck, I’d go back, edit my prompts to include logs of failed attempts and specific error messages, forcing the AI to use its intelligence on new paths instead of repeating failures.

Check out this example, where I’m trying to figure out why my game loads on some browsers and not others:

I've set up a domain and I have my virtual machine be using that domain through a DNS record and I can surf pages through it and it all works over http. However, with HTTPS it works for most browsers, but not for Firefox. So can we try and figure out why that's happening and what's going on there?

Heres the error i get on firefox:

<<A whole bunch of logs from firefox, then more logs from brave, then more logs from firefox showing the case where they do work>>

Heres the site index and html im serving (before its parsed by unity to insert the right stuff in there like game name ect):

@index.html @style.css

I've looked into compression methods on Cloudflare and there's no release setting to disable compression that I could find. It's also interesting that the content encoding headers are missing on Firefox through HTTPS, but not on Brave through HTTPS. I have tried clearing the cache and even making a rule that bypasses the cache, but that doesn't seem to help. infact disabling the cash breaks it on the other browsers where they get an undefined error (index):52 Uncaught ReferenceError: createUnityInstance is not defined

at (index):52:7

----

can you help figure out what we need to do to fix this problem and go from there

This structure focuses the finite intelligence by providing clear problem definition, specific data, comparisons, relevant code, and a history of failed attempts.
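That structure can be captured in a small template. The sketch below is a hypothetical Python helper, not something from the project: `DebugReport` and its field names are made up for illustration, and the log text is a placeholder for whatever you paste in.

```python
from dataclasses import dataclass, field


@dataclass
class DebugReport:
    problem: str                                          # plain-language problem statement
    logs: dict[str, str] = field(default_factory=dict)    # label -> pasted log text
    files: list[str] = field(default_factory=list)        # file references, e.g. "@index.html"
    tried: list[str] = field(default_factory=list)        # attempts that already failed

    def to_prompt(self) -> str:
        """Assemble the sections in a fixed order: problem, data, code, history, ask."""
        parts = [self.problem.strip()]
        for label, log in self.logs.items():
            parts.append(f"Logs ({label}):\n{log.strip()}")
        if self.files:
            parts.append("Relevant files: " + " ".join(self.files))
        if self.tried:
            parts.append("Already tried:\n" + "\n".join(f"- {t}" for t in self.tried))
        parts.append("----\nHelp figure out what we need to do to fix this problem.")
        return "\n\n".join(parts)


report = DebugReport(
    problem="HTTPS works in most browsers, but not in Firefox.",
    logs={"firefox, failing": "<paste log here>", "brave, working": "<paste log here>"},
    files=["@index.html", "@style.css"],
    tried=["Cleared the cache", "Added a rule that bypasses the cache"],
)
prompt = report.to_prompt()
```

Including a working case next to the failing one gives the model a comparison to reason from, and the list of failed attempts keeps it from suggesting them again.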

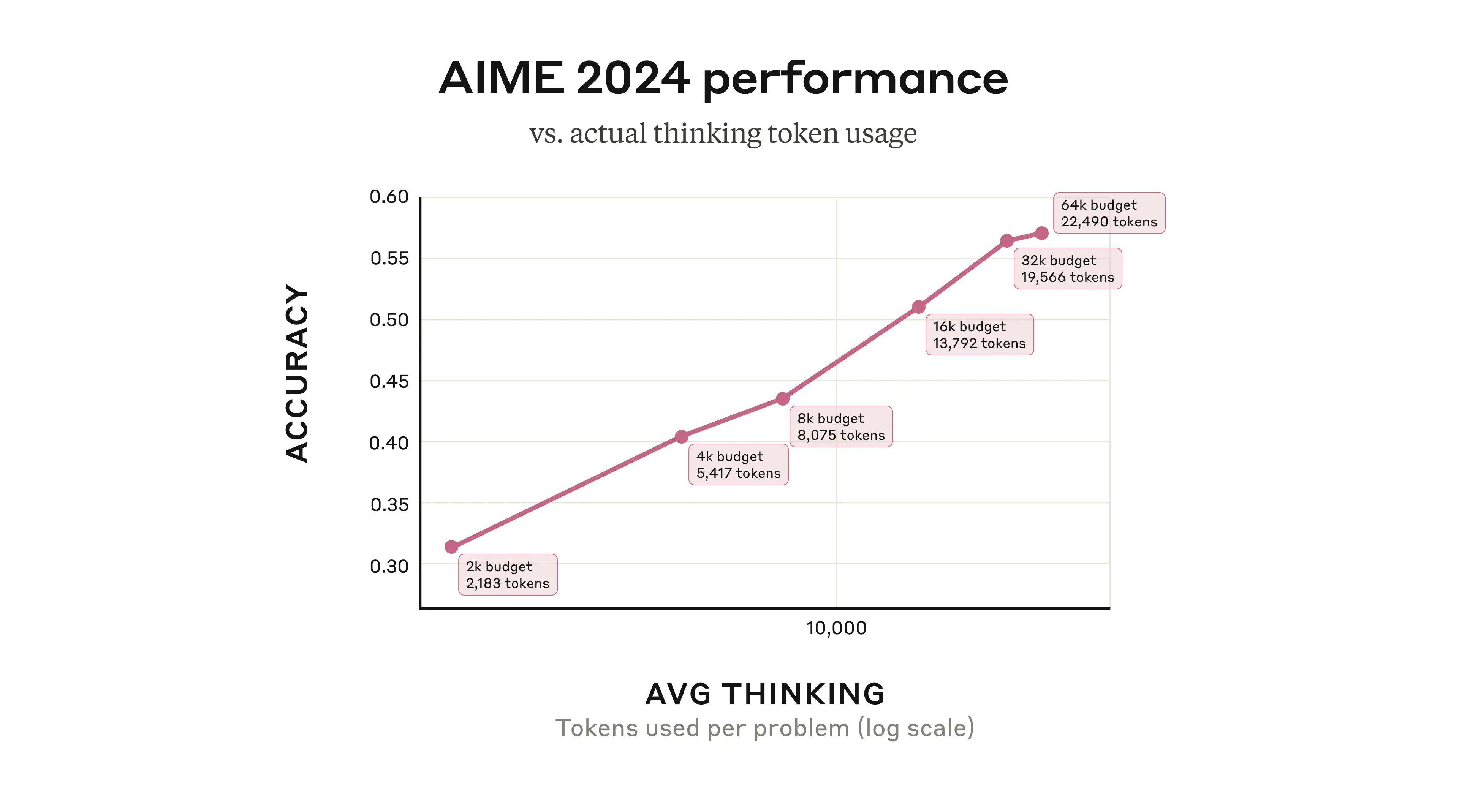

This idea of intelligence as a controllable resource isn’t just a metaphor. Research by Anthropic, for example, shows a direct correlation between giving models more ‘thinking time’ (computation) and their ability to solve complex problems accurately, as seen in their graph below from the ‘Visible Thinking’ work. Some models even offer API controls for this ‘thinking time’, literally giving you a dial to control how much intelligence you use per prompt.
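As a rough illustration of that dial, here is a Python sketch that only builds a request body and does not send anything. The `thinking` / `budget_tokens` fields and the 1024-token minimum reflect my understanding of Anthropic's extended thinking option for the Messages API, so treat them as assumptions and check the current API reference; `build_request` and `answer_tokens` are hypothetical names.

```python
def build_request(prompt: str, thinking_budget: int, answer_tokens: int = 2000) -> dict:
    """Build a Messages-API-style payload with an explicit thinking budget."""
    if thinking_budget < 1024:
        # Assumed minimum budget; verify against the API documentation.
        raise ValueError("thinking_budget must be at least 1024 tokens")
    return {
        "model": "claude-3-7-sonnet-20250219",
        # max_tokens has to leave room for the thinking budget plus the answer.
        "max_tokens": thinking_budget + answer_tokens,
        "thinking": {"type": "enabled", "budget_tokens": thinking_budget},
        "messages": [{"role": "user", "content": prompt}],
    }


# Same question, two different amounts of "intelligence per prompt".
quick = build_request("Why does my WebGL build fail only in Firefox?", thinking_budget=1024)
deep = build_request("Why does my WebGL build fail only in Firefox?", thinking_budget=16000)
```

The only difference between the two payloads is the budget, which is the whole idea: the question stays the same and you choose how much computation to spend on it.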

Part 5: Making AI Make Polish

Obviously, polish is also something I threw AI at. ChatGPT 4.5 generated all the in-game text, not that there was much of it.

The results were good - consistent with the game’s tone and definitely saved me time. When I asked for some sample phrases for one part of the game, I liked all the suggestions so much that instead of picking one, I incorporated them all, randomized. The AI’s output actually made that bit better than what I was planning. If you play through the game to the very end, you can check for yourself.

I’m not a great writer by any means, and I think it actually did a better job than I would have.

Conclusion: Stop Thinking ‘Tool’, Start Being The Sand Whisperer

So, did AI co-develop this game? That question feels increasingly irrelevant. AI isn’t just a tool anymore. It’s a basic resource - intelligence itself. That artificial sand we can shape.

My role felt less like a traditional developer and more like a Landscaper or Sand Whisperer, perhaps. I built the setup, defined the channels for the AI sand, picked the results, and sometimes smoothed the edges. But the AI did all the work across design, code, infrastructure, and writing.

The things AI can build alone are getting bigger, fast. The important skills are changing: designing AI-readable systems, getting good at creating effective contexts, developing taste to pick AI results, and building the solid setup needed to manage the flow.

It’s not a question of whether you should use AI. It’s how well you can use this powerful new resource. For me, this jam showed that building around AI, making that intelligence flow through every part of creation, isn’t just possible - it’s a mistake not to.

The bottom line is this: if we’re trying to build a Sand God, we might as well think of it as sand, alright?
